I had one of those problems to solve that initially had me toying with blowing the dust off some Python, but in the end it struck me as the perfect time to get more in touch with my Mac’s command line, a.k.a. the Terminal.

A few years ago I was working with the brilliant Jan Exner, and on a client site I was spellbound as he used the command line to completely pacify and coerce a massive SiteCat log file. With a few deft, obscure-looking commands, he whittled the monster text file down to the usable and meaningful snippets for the problems at hand. He then contorted it and output it into a sequence of secondary files, which became the human-readable data for the analysis that would follow.

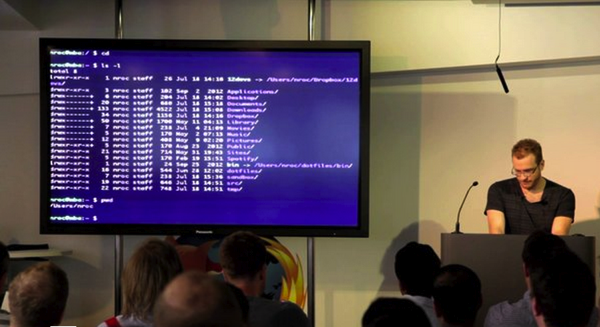

Last month, I went to the second 12Devs event, based out of the Mozilla Hackspace. Of the many great talks, I was really inspired by Paul’s (@nrocy) rapid-fire and completely hands-on talk (I love talks with live coding!), all about “Make Darwin your …..”. The 12Devs team have made the video available here: https://vimeo.com/72650338 (note: it’s a bit sweary)

I’ll admit that for parts of the solution I used Sublime Text 2’s powerful operators, but I’m pretty sure that next time it’ll be even quicker once I’ve figured out the append/prepend commands.

So, here are the steps in the problem, and the commands I used to solve it (I’d happily learn of improvements if anyone has tips to share):

- parsing a large manifest file

- extracting the relevant subset of the contents

$ grep "key=value" myfile.txt > criteria1_output.txt

- extracting a specific subpart of that information (that was within double quotes)

$ cat criteria1_output.txt | cut -d'"' -f4 > outputIDs.txt
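
To see why field 4 is the one to ask for, it helps to run the same two commands on a single line. The manifest lines below are entirely made up (the real file's layout will differ), so treat this as a sketch of how `cut` counts fields when the delimiter is a double quote, not as the actual data.

```shell
# Hypothetical manifest lines; the real file's format will differ.
cat > sample_manifest.txt <<'EOF'
item id="001" file="a1b2c3.jpg" key=value
item id="002" file="d4e5f6.jpg" key=other
EOF

# Splitting on the double quote gives:
#   1=[item id=]  2=[001]  3=[ file=]  4=[a1b2c3.jpg]  5=[ key=value]
# grep and cut can also be piped together, skipping the intermediate file.
grep 'key=value' sample_manifest.txt | cut -d'"' -f4 > extracted_ids.txt
cat extracted_ids.txt
```

Only the first line matches `key=value`, so the output file ends up with the single quoted value from that line.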

- reconstructing that data into a full URL

I used ST2’s amazing multi-caret control, simultaneously editing a 150,000-line file! (It was a little slow.)

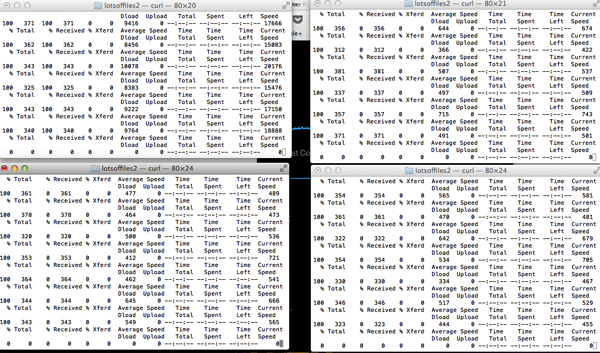
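
For the append/prepend part, `sed` is one command-line candidate that might save the trip into the editor. This is only a sketch: the base URL, the `.jpg` extension and the sample IDs are placeholders I've invented, not the real values.

```shell
# Stand-in for outputIDs.txt, with made-up IDs.
printf '%s\n' 'a1b2c3' 'd4e5f6' > sample_ids.txt

# Prepend a (hypothetical) base URL and append an extension to every line.
# "|" is used as the sed delimiter so the slashes in the URL need no escaping;
# "&" in the replacement stands for the whole matched line.
sed 's|.*|http://example.com/images/&.jpg|' sample_ids.txt > sample_urls.txt
cat sample_urls.txt
```

Because `sed` streams the file line by line, it shouldn't slow down on a 150,000-line file the way an editor holding every caret in memory does.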

- downloading a local copy of each remote file from the formed URL

$ cat outputIDs.txt | xargs -n1 curl -O

- analyse each file against a given criterion

- output the list of matching files into a flat file

[steps 6 & 7 combined]

$ find . -size +5M > OUTPUT.txt
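
A quick way to check what that `find` picks up is to try it on a throwaway folder. The folder and file names here are invented for the test, and `-type f` is my addition (it just stops directories from being listed).

```shell
# Build a throwaway directory with one large and one small file.
mkdir -p find_demo
dd if=/dev/zero of=find_demo/big.jpg bs=1048576 count=6 2>/dev/null
printf 'tiny' > find_demo/small.jpg

# -type f skips directories; -size +5M keeps files larger than 5 MiB.
find find_demo -type f -size +5M > big_files.txt
cat big_files.txt
```

Only the 6 MiB file should be listed; the four-byte one is filtered out.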

- use that file’s contents to generate the src for the img elements in an HTML file.

I again used ST2’s amazing multi-caret control, this time editing far fewer lines, instantaneously.
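
The same `sed` idea could probably cover this step too. A minimal sketch, assuming one file path per line; the sample paths and the `gallery.html` name are placeholders of mine.

```shell
# Stand-in for OUTPUT.txt, with made-up paths.
printf '%s\n' './a1b2c3.jpg' './d4e5f6.jpg' > sample_output.txt

# Wrap every line in an img element ("&" is the whole matched line),
# with a bare-bones HTML wrapper around the result.
{
  echo '<html><body>'
  sed 's|.*|<img src="&">|' sample_output.txt
  echo '</body></html>'
} > gallery.html
cat gallery.html
```

Paths containing double quotes or ampersands would need escaping first, which this sketch doesn't attempt.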

I had to utilise multiple terminals running multiple cURLs for quite some time to do all of the downloads (apparently cURL has no multi-connection option), but otherwise my actual hands-on time was probably only 30 minutes, with the majority of that spent Googling for the necessary commands.
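
On the multiple-terminals point: `xargs` has a `-P` option that runs several commands at once, which may be a way to get parallel downloads from a single terminal. The sketch below is a dry run, so nothing is fetched; the URLs are placeholders and the worker count of 4 is arbitrary.

```shell
# Placeholder URLs standing in for the real list.
printf '%s\n' 'http://example.com/a.jpg' \
              'http://example.com/b.jpg' \
              'http://example.com/c.jpg' > parallel_urls.txt

# -n1 passes one URL per command; -P4 keeps up to four commands running at once.
# "echo" makes this a dry run that only prints the commands (in no fixed order);
# remove it to perform the downloads.
xargs -n1 -P4 echo curl -O < parallel_urls.txt > dry_run.txt
sort dry_run.txt
```

With `echo` removed, each worker would run its own `curl -O`, so a slow file wouldn't hold up the rest of the list.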