After Saturday’s rather fruitful hour, I set myself a follow-up challenge: how much further could I improve the site’s web performance given a second hour?

Last time I took my PageSpeed from 79 –> 88/100

As I have hopefully finished updating my image assets (for now anyway), I increased the Expires headers for my images to “access plus 1 week” in my .htaccess file:

# Media

ExpiresByType image/gif "access plus 1 week"

ExpiresByType image/jpeg "access plus 1 week"

ExpiresByType image/png "access plus 1 week"

Which took my PageSpeed score to 97/100. Er, WOW!

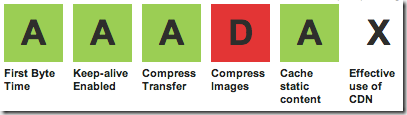

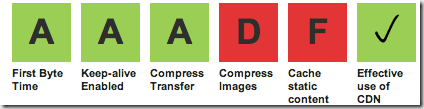
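As an aside, here is a minimal Python sketch of what an “access plus 1 week” rule works out to for a single response. As I understand it, Apache’s mod_expires sends both an Expires date and a matching Cache-Control max-age; the function name `access_plus` and the example request time are my own illustrative assumptions, not anything taken from the server.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def access_plus(access_time: datetime, lifetime: timedelta) -> dict:
    """Headers implied by an "access plus <lifetime>" expiry rule."""
    expires_at = access_time + lifetime
    return {
        # HTTP dates are always expressed in GMT.
        "Expires": format_datetime(expires_at, usegmt=True),
        # max-age is the same lifetime expressed in whole seconds.
        "Cache-Control": f"max-age={int(lifetime.total_seconds())}",
    }


# Arbitrary example request time, chosen only for illustration.
requested = datetime(2013, 5, 19, 12, 0, 0, tzinfo=timezone.utc)
headers = access_plus(requested, timedelta(weeks=1))
print(headers["Expires"])        # Sun, 26 May 2013 12:00:00 GMT
print(headers["Cache-Control"])  # max-age=604800
```

The point is simply that the expiry is relative to when the browser fetched the image, so every visitor gets their own one-week window.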

I re-ran WebPageTest:

Fully loaded: 2.780s, requests: 43, bytes in: 1076 KB

(As before, there is some variance when rerunning the test, typically between 2.8 and 3.1s.) This time, though, I’m looking at the summary graphic:

Oddly, WebPageTest is still not completely happy with my image compression, so I’ll perhaps need to investigate that at some point. However, clearly the biggest negative is that big black X relating to effective use of a CDN.

In my day job I routinely use Akamai and Level3, but for my side projects I generally make use of Amazon CloudFront.

Referring back to my previous post on CloudFront and Origin Pull, the steps were:

– Create a new subdomain on the site, e.g. images.mydomain

– Set up a new S3 bucket (I like to name it the same as the domain)

– Configure a new CloudFront distribution, CNAME’d for the specific site

– CNAME map the subdomain to the CloudFront distribution

– Make all of the S3 contents ‘public’

I then remapped all of the img src URLs to use images.mydomain, instead of the relative paths they were using for local assets, and thanks to CloudFront supporting origin pull, hey presto…

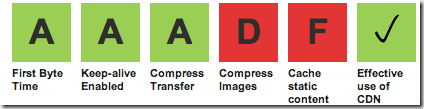

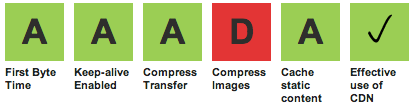
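The src remapping described above could be scripted rather than done by hand. What follows is only a rough sketch, not the method I actually used: the hostname `images.example.com` is a placeholder for the real subdomain, `remap_img_srcs` is a made-up helper name, and it assumes the page lives at the site root so that relative paths can be resolved against the CDN host directly. A regular expression is good enough for simple hand-written markup, although a proper HTML parser would be safer.

```python
import re
from urllib.parse import urljoin

# Hypothetical CDN hostname standing in for the real images subdomain.
CDN_BASE = "http://images.example.com/"

# Captures: everything up to src=, the quote character, and the src value.
IMG_SRC = re.compile(r'(<img\b[^>]*?\ssrc=)(["\'])(.*?)\2', re.IGNORECASE)

# Matches values that already carry a scheme (http:, data:) or start with //.
ABSOLUTE = re.compile(r'(?:[a-zA-Z][a-zA-Z0-9+.-]*:|//)')


def remap_img_srcs(html: str, cdn_base: str = CDN_BASE) -> str:
    """Point relative <img> src values at the CDN host."""
    def swap(match: re.Match) -> str:
        prefix, quote, src = match.groups()
        if ABSOLUTE.match(src):
            return match.group(0)  # leave absolute and data: URLs untouched
        return f"{prefix}{quote}{urljoin(cdn_base, src)}{quote}"

    return IMG_SRC.sub(swap, html)


print(remap_img_srcs('<img src="images/logo.png" alt="Logo">'))
# <img src="http://images.example.com/images/logo.png" alt="Logo">
```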

Running WebPageTest again, the results were a mixed bag. A nice green tick for now making effective use of the CDN, but also an indication that the Cache Expires headers for my images were now suboptimal. Bugger.

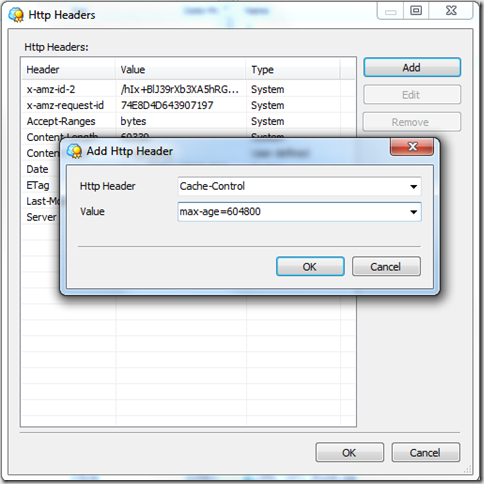

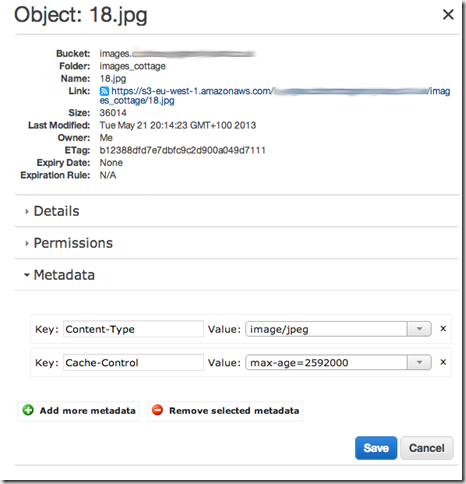

To resolve, I needed to set the Expires Header on my S3 contents.

This is possible via the browser-based AWS S3 console, but I prefer to use the rather brilliant CloudBerry S3 Explorer Pro (unfortunately Windows only).

So having set Cache-Control: max-age=604800 (i.e. 1 week), I reran WebPageTest, only to find that my ‘Cache Static Content’ grade was still an F. It appears that to get better scores on WebPageTest you need a max-age of around a month, so I used CloudBerry to update it to:

Cache-Control: max-age=2592000
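For anyone checking the arithmetic, the two max-age values quoted above are just a number of days converted to seconds. A tiny sketch follows; `max_age_header` is an illustrative name, and “a month” is taken to mean 30 days.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def max_age_header(days: int) -> str:
    """Build a Cache-Control value for a lifetime given in whole days."""
    return f"max-age={days * SECONDS_PER_DAY}"


print(max_age_header(7))   # max-age=604800
print(max_age_header(30))  # max-age=2592000
```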

Of course, I’ll have to wait a week for my assets to expire under the previous Cache-Control header before I can verify the results. Another step I need to add to my planned code/build/deploy workflow is support for some lightweight versioned naming convention, so I can avoid this problem with month-long expiry headers.
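I haven’t settled on a naming convention yet, but one common option is to embed a short hash of the file’s contents in its name, so that a changed image gets a new URL and the old long-lived cache entry simply stops being requested. Here is a minimal sketch of that idea; `versioned_name` and the 8-character digest length are arbitrary choices of mine, not an established standard.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(path: str, content: bytes, digest_len: int = 8) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    # e.g. images/logo.png -> images/logo.<digest>.png
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


old = versioned_name("images/logo.png", b"first version of the image")
new = versioned_name("images/logo.png", b"second version of the image")
print(old != new)  # True: changed content yields a different file name
```

The build step would then upload the renamed file and rewrite the img src to match, which is where it would tie into the deploy workflow.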

After a slightly more involved second hour:

The PageSpeed improvement was: 88/100 –> 98/100

and my overall WebPageTest grades have moved from:

to

(and I’m hopeful that the grade F will have shown improvement once the cache headers have expired to allow my new max-age).

[Update 2013-05-26] Now that the 1 week Expires header has expired, my revised Cache-Control settings are live. Re-running WebPageTest now gives me:

I also spent 10 minutes on a few other minor tweaks, which took PageSpeed to 99/100:

– optimise one image I had overlooked (reducing size by around 250 bytes)

– minify HTML (using the Sublime Text plugin ‘HTML Compressor’)

– leverage browser caching (“access plus 1 week” on CSS and JS)

– specify image dimensions

Which leaves that pesky Compress Images ‘D’ to address. I plan to do some ad-hoc testing of some of the solutions mentioned in the following blog post:

www.netmagazine.com/features/best-image-compression-tools

Next steps: I still need to defer my JavaScript loading and look at lazy loading the images for my carousel. I’m also rather looking forward to spending time improving my code/build/deploy workflow, probably starting with getting to know Sublime Text 2 a little better.